As I continue to explore the realm of Artificial Neural Networks (ANN) I keep learning about some really cool types of neural networks which don’t get much attention these days. Many of these networks have not found much practical use in the “real world” for various reasons. Nevertheless, I find learning about them in my free time somewhat stimulating as they often make me think of some problems from a different perspective. One such ANN is called Hopfield Network (a.k.a. Hopfield net), named after its inventor John Hopfield.

In this post I will provide a summary of Hopfield network features which I find the most interesting. I tend to hack on the stuff I learn about, so this post will also introduce my implementation of Hopfield networks in Go which I’ve called gopfield. I used Go again, as I feel comfortable coding in it at the moment, at least until I get a better grasp of TensorFlow. If you find any mistakes in the post or if you find the post interesting don’t hesitate to leave a comment at the end! Let’s get started!

Human memory

Let’s talk about human memory first since Hopfield network models some of its functions. Human memory is an incredibly fascinating and complex thing. When I think about it I often think of the story by Borges called Funes the Memorious. It is a story of man who remembers everything in a crazy amount of detail, a condition also known as Hyperthymesia. Remembering everything we have lived through in so much detail must be ridiculously energy expensive – at least when it comes to recalling stuff from memory. Querying the memory would require some serious “mining” through a tremendous amount of data stored in it.

Most of the people seem to remember mostly the “core features” of the things they experience. We often forget and/or remember things differently compared to how they actually looked or happened. With the help of the brain [neurons], we can assemble experiences from the core features stored in our memory and get a reasonably coherent picture in our minds. This assembly from individual pieces is at the core of the Hopfield network memory model. Let’s explore it a bit more now.

Hopfield network can also be used to solve some optimization problems like travelling salesman problem, but in this post I will only focus on the memory aspect of it as I find it more interesting. Plus, getting the Hopfield network to solve the optimization problems often requires crafting the weights matrix of the Hopfield network manually, which is often quite laborious and generally not easy.

Hopfield network

Hopfield network is a recurrent neural network which can be used as a content addressable memory (CAM). Recurrent neural networks have the ability to maintain an internal state, which makes them a great choice to model various memory functions. This is not to say that Recurrent ANNs are only useful to model memory! Their ability to keep an internal state (i.e. memorize patterns) makes them useful in a wide range of applications, albeit these are often way more complex than Hopfield networks.

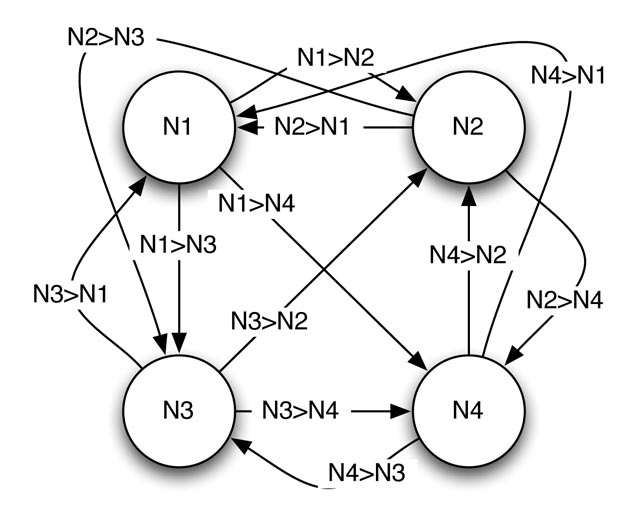

Hopfield network consists of binary neurons: they can only be either on or off (0/1 or +1/-1). The neurons are fully connected to each other in both directions i.e. neuron \(i\) is connected to neuron \(j\) and vice versa. Each connection has a weight assigned to it. The value of the connection weight is the same in both directions between each two neurons e.g. the weight \(w_{ij}\) is the same as \(w_{ji}\). Network weights can be represented by a weights matrix. Each element in particular row and column of the weights matrix represents the connection weight between those particular neurons. Because of the connection symmetry, the weights matrix is symmetric with diagonal elements equal to \(0\), since no neuron is connected to itself.

Just like any other ANN, Hopfield network needs to be trained first before it can be used. Unlike some other neural networks, Hopfield network is trained to store data (patterns). Since the Hopfield network neurons are binary, the network only allows to store binary data. There are several ways to train Hopfield networks, but I only focussed on understanding the two most well known training algorithms: Hebbian and Storkey learning.

Hopfield network training stores the binary data [patterns] by modifying the network weights based on particular learning algorithm. Once the network is trained, i.e. it has stored all the data we have supplied to it during training, we can try to recall any of the stored patterns. Before I shed a bit more light on how this process works let’s talk a bit about network energy which can help us understand how Hopfield networks work.

Energy and pattern basins

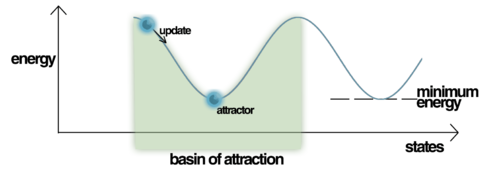

Energy is a scalar number (measure) which helps to explain and quantify the network behavior mathematically at any point of time. When we store a pattern in Hopfield network during training, it becomes an energy attractor. When we try to recall a pattern from the network by presenting it some data pattern, the stored patterns “attract” the presented data towards them. I will talk about the recollection phase later on, for now just remember that during the pattern recollection the energy of the network changes. If you were to plot the network energy landscape, you would notice that the stored patterns create so called basins of attractions to which the newly presented pattern fall in as you try to recall some pattern:

By Mrazvan22 - Own work, CC BY-SA 3.0

What’s really interesting about the energy function is that it is a Lyapunov function. This is a special kind of function which can be used to analyse the stability of non-linear dynamic systems: something I still remember from the control engineering theory I studied at university. For some dynamic systems Lyapunov function provides a sufficient condition of stability. In the context of a Hopfield net, this means that during the pattern recollection the energy of the network is guarnateed to be lowering in value and eventually ends up in one of the stable states represented by the attractor basins. This means that Hopfield network will always recall some pattern stored in it. Unfortunately the attractors represent only local minima so the recalled pattern might not be the correct one. The incorrect patterns are referred to as spurious patterns (spurious minima).

Open questions

One of the questions which popped up in my head when learning about Hopfield networks was whether there is some way how to actually make the attractor basin deeper. Intuitively, the deeper the attractor basin, the higher the attraction is. One of the options could be doing something like presenting the same pattern to the network during the training multiple times. This way you could “manipulate” the memory by attracting more patterns to this particular “memory”. Equally, if my theory does work, there should also be a way how to do the opposite i.e. “confuse” the memory by making the deep basins more shallow by training the network with some highly correlated but different data patterns: apparently Hopfield network don’t like when the patterns you’re trying to store in it are linearly dependent. I’m not sure any of these theories work. I need to do some research and perform some tests on this. I will report back with the results!

Capacity and spurious patterns

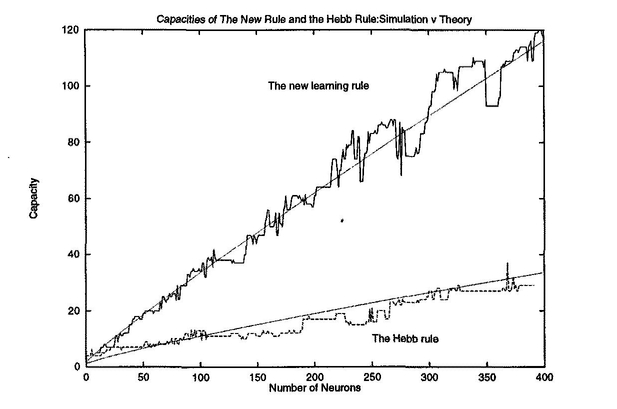

Everything in the nature has its limits and so does the storage of the Hopfield networks. The number of patterns a Hopfield networkcan store is limited by the size of the network and chosen learning algorithm. You can get a rough idea from the graph below

Storkey learning allows to store more patterns than Hebbian learning, however it takes longer to train the network. Hebbian learning on the other hand is simpler and to me personally easier to understand. Either way, if you exceed the network capacity and try to recall a pattern from it, you might experience the spurious patterns I mentioned earlier.

Memory recall

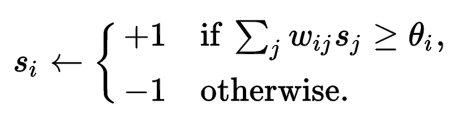

Before we dive into my Go implementation I will shortly describe how the pattern recollection works, because I find it super interesting! During the recall phase the network runs continuously until the states of the neurons in the network settle on some values. Once the energy settles, we are in one of the energy basins. Let’s say we have trained our network using one of the learning algorithms. Now, if we want to recall some pattern we present it to the network. Each neuron \(s_i\) in the network starts switching its state following the formula below:

where \(s_j\) is the state of neuron \(j\), \(w_{ij}\) is the connection weight between neurons \(i\) and \(j\) and \(\theta_{i}\) is some threshold, normally set to \(0\).

We know that the network weights are fixed since they are only modified during training, not during the recall phase. This means that the result of the formula above is driven by the states of the neurons in the network. Notice that a neuron switches its state “on” if majority of the neurons the neuron is connected to are also on. The more neurons are in on state, the higher the chance the state of the neurons \(i\) will switch on, too and vice versa. This seems sort of like a peer pressure. The more of your peers are off, the likelihood you’ll switch off, too is very high. How cool is that? :-)

So how can this be useful? Imagine you are a police department and you have a database of criminals. When a new crime happens you interrogate a witness and put together a rough image of the offender. This is not an exact image as its based on the witness description. Now you digitize it and run it against the Hopfield network trained with the images of suspects in that area at the given time. The result can give you an indication of who could be the offender. The idea can be expanded to other use cases, like remembering the passwords from a database of known passwords, an idea I have played around in my head for some time.

Go implementation

Thanks for making it all the way to the Go implementation! Let’s dive into some code now! Full source code is available on GitHub. The project codebase is pretty simple. It contains a single Go package called hopfield. You cab create a new Hopfield network like this:

n, err := hopfield.NewNetwork(pattern.Len(), "hebbian")

if err != nil {

fmt.Fprintf(os.Stderr, "\nERROR: %s\n", err)

os.Exit(1)

}

NewNetwork function accepts two parameters: the size of the network and type of the training algorithm. The project currently support only the earlier mentioned Hebbian and Storkey algorithms. Now that you have created new network you can start storing some data in it. The data must be encoded as binary vectors since the Hopfield networks can only work with binary data. The Store method accepts a slice of Patterns. Pattern represents the data as binary vectors. You don’t have to craft the Patterns manually: hopfield package provides a simple helper function called Encode which can be used to encode the data into accepted format. The dimension of the data must be the same as the size of the network:

pattern := hopfield.Encode([]float64{0.2, -12.4, 0.0, 3.4})

if err := n.Store([]*hopfield.Pattern{pattern}); err != nil {

fmt.Fprintf(os.Stderr, "\nERROR: %s\n", err)

os.Exit(1)

}

You can store as many patterns in the network as the network capacity allows. Network capacity is defined by both the size of the network and chosen training algorithm. You can query the network capacity or the number of already memorised patterns using the methods shown below:

cap := n.Capacity()

mem := n.Memorised()

Now that you have stored some patterns in the network you can try to recall some patterns from the network using the Restore function. You can specify a mode of the restore (async or sync - Note: restore mode has nothing to do with programming execution) and the number of iterations you want the network to run for:

res, err := n.Restore(pattern, "async", 10)

if err != nil {

fmt.Fprintf(os.Stderr, "\nERROR: %s\n", err)

os.Exit(1)

}

That’s all there is to basics. Feel free to explore the full API on godoc and don’t forget to open a PR or submit an issue on GitHub. Let’s have a look at some practical example.

Recovering MNIST images

Apart from the Hopfield network API implementation, the gopfield project also provides a simple example which demonstrates how the Hopfield networks can be used in practice. Let’s have a quick look at it now! The example stores a couple of MNIST images in the Hopfield network and then tries to restore one of them from a “corrupted” image. Let’s say we will try to “recover” an image of number 4 which has been corrupted with some noise:

The program first reads couple of MNIST images into memory one by one. It then converts them into binary data patterns using a helper function Image2Pattern provided by the project’s API. The images are then stored in the network as vectors which have the same dimension as the number of the image pixels: all the MNIST images from particular set have the same dimension which defines the size of the Hopfield network. Go standard library provides a package that allows to manipulate digital images, include gifs! I figured it would be cool to render a gif which would capture the reconstruction process:

You can see how the network pixels are reconstructed one by one until eventually the network settles on the correct pattern which represents the image we were tryint to restore. We could also plot the energy of the network over the course of recall and we would see that the energy eventually settles on some value which corresponds to one of the local minimas. There is much more to the implementation than what I briefy describe, so feel free to inspect the actual source code on GitHub. This example hopefully gives you at least some visual context about how the Hopfield network recall process works.

Conclusion

Thank you for reading all the way to the end! I hope you enjoyed reading the post and also that you have learnt something new. We talked about Hopfield networks and how they can be used to memorize and later recall some data.

I have also introduced project gopfield which provides an implementation of Hopfield networks in Go programming language. Finally I have illustrated how the project can be used in practice by reconstructing a corrupted MNIST image from the network.

Let me know in the comments if you come up with some cool use of the Hopfield networks or if you know the answers on some of the questions I wondered about in the post.