It all started as a joke. I was in a group chat with a few of my friends and we were talking about football (soccer for the American readers). I entered the chat during a mildly heated discussion about the manager of a team one of my friends supports. It had been going on for a while with seemingly no end in sight when it occurred to me that I could just as well clone my friends’ voices and pit them against each other by backing them with LLMs, and I’d probably not see much difference in the conversation.

I hacked together a small proof of concept in Go that very night and, much to my amazement, it worked surprisingly well. Though the prototype was a single-bot experiment with no conversational counterpart other than me prompting it via standard input, it made me realize how easy it’s become to build conversational bots with actual “voices”. I buried the original (arguably half-broken) code in one of my GitHub gists that night with the mild satisfaction of accomplishment and moved on with my life.

A few weeks later I started picking up Rust again and I was looking for a project to work on that’d help me practice. I remembered the fun little bot experiment and I figured maybe I could hack on something similar in Rust. In the spirit of the original inspiration from a conversation that led pretty much nowhere, I decided to make things more interesting by pitting a bot written in Go against a bot written in Rust and get them to discuss both programming languages.

That took me down a rabbit hole I hadn’t imagined before I rolled up my sleeves. It was a rabbit hole I’ve thoroughly enjoyed though: I got to learn about advanced Rust concepts, tokio and various concurrency patterns, build API libraries for a remote TTS provider in both Go and Rust and so much more! This blog post is a short story of the how.

As a small tease, here is a rather rough sample of the conversation generated by the current prototype:

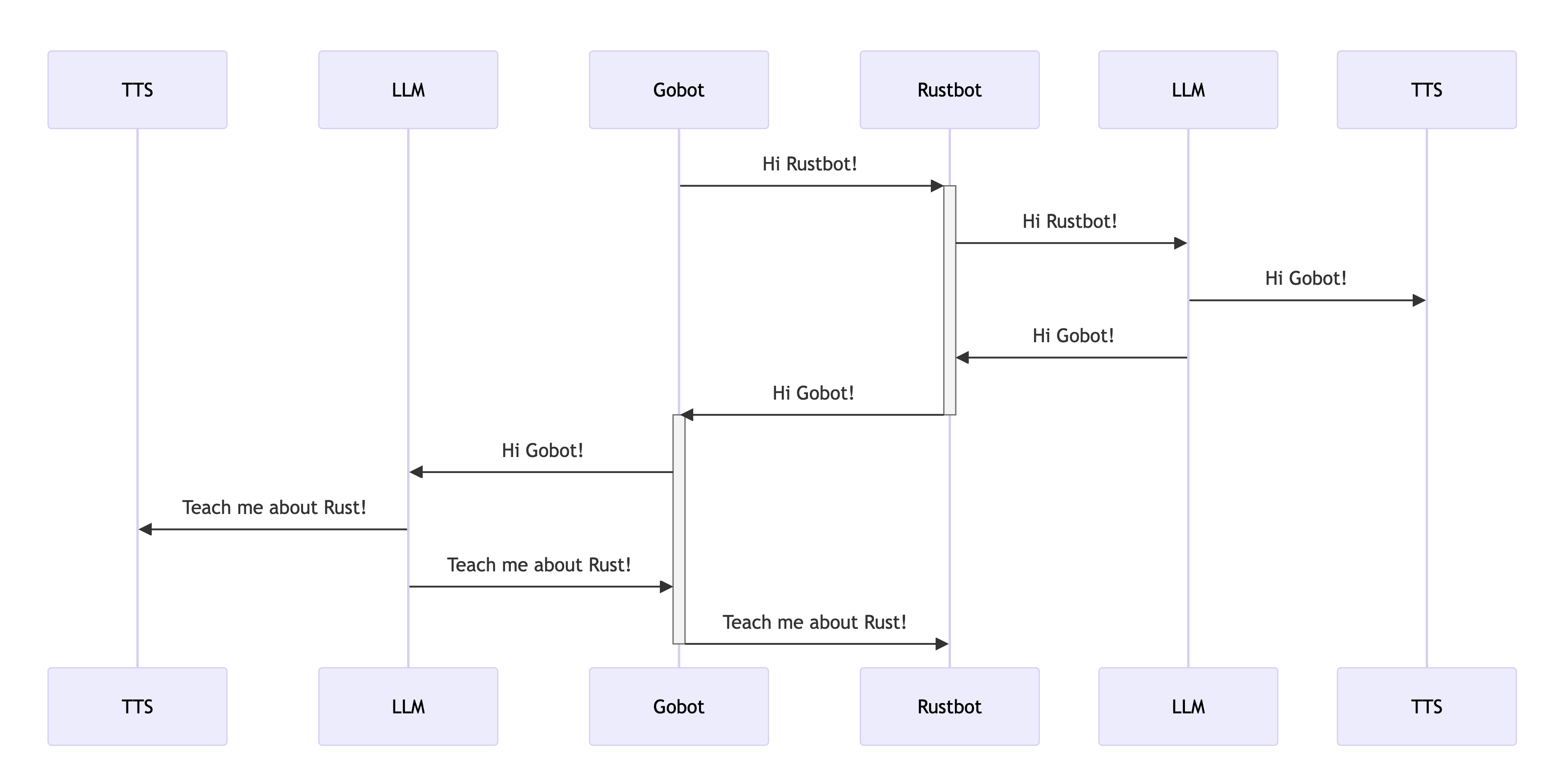

Whenever I start working on something slightly more complicated I tend to draw a lot of diagrams first. Most of them turn out to be useless but they help me crystalise my thinking about a problem at hand.

Usually, I start with something very generic and then work my way through DFS (Depth First Search) down to the implementation details. For this particular project, I needed a few basic components:

- Two bots: one written in Go (gobot) and the other one written in Rust (rustbot)

- LLM - accessible via API the bots could prompt to generate answers

- TTS - accessible via API the bots could use to generate audio

- access to the audio device(s) on the machine the bots would run on

Once I have these I try to model data flows – usually I do both these tasks at once, but for the sake of brevity I mention them as separate steps. This is the rough idea of the conversational flow I had in my mind:

Hopefully the diagram makes the ideas clearer to you, but just in case it doesn’t, here is a short description of the conversation sequence:

- gobot sends a message to rustbot

- rustbot forwards it to an LLM to generate an answer to gobot

- LLM generates the answer to gobot’s message

- TTS plays the audio of the generated answer

- rustbot sends the generated answer to gobot

- Rinse and repeat ad infinitum (ok, maybe not ad infinitum)

Now that I had a rough idea about the basic flow in my head, I could proceed with thinking about the specifics for each block in the flow diagram. The bots would be written in Go and Rust, that’s a given. But what about the LLM? And the TTS? And how will the bots communicate with each other?

Design Implementation Details

One of the things I wasn’t entirely clear on initially was the message transport [between the bots]. The few options I considered were:

- REST API: each bot would expose an API endpoint used for accepting responses

- WebSockets: bots would stream the messages between each other via WebSockets

- Message bus: bots would publish/subscribe to dedicated message topics/subjects

I didn’t like the idea of the REST API endpoint; not being able to replay bot messages was a no-go (yes, I could record them and replay them afterwards, but the machinery that would enable that was rather off-putting). I drew similar conclusions about the WebSocket (WS) option, which had another drawback: maintaining WS connections between two endpoints kinda sucks. Been there, done that, nah thanks. Not to mention having to figure out a communication protocol between the bots, given that WS only gives you a bare message stream and leaves the application-level protocol up to you. So I settled on the last option, whose pros outweighed, at least in my head, many of the discussed cons. Plus it required very little effort on my part:

- Message routing: not having to deal with implementation details of message routing would be a huge win

- Message replay: handy for replaying bot conversations; indispensable for debugging

- Battle tested communication protocol: I had no interest in inventing another one for a side project

- Client libraries: not having to write client libraries again would be a huge win and a time saver

Message Bus

As for the actual message bus I decided to go with one of my favourite kits out there: NATS JetStream. I’ve always said NATS is extremely underrated in the world ruled by Kafka and the like. I’ve been a big fan of it from very early days and used it in a few of my side projects. NATS has decent client library support for various programming languages and pretty extensive documentation. Not to mention all the great educational resources Jeremy Saenz has been putting out on Synadia’s YouTube channel.

NOTE: I have no affiliation with Synadia, I just really like the product they develop

LLM

As for the LLM, I decided to go with ollama running llama2 (this was at the time llama3 was not yet released), though I have made the model configurable so you can pick any model ollama can run. The important thing for me was having a stable API I could interact with from the bots.

I could just as well use one of the paid LLM offerings like OpenAI or Anthropic’s Claude etc., but I didn’t want to spend my tokens on a silly side project during the initial development phase: Ollama makes LLMs accessible on your machine via a simple API. And as an added bonus, there are some nice API client libraries I could use!

TTS

One last piece of the puzzle was the audio synthesis aka TTS (Text To Speech). In my original experiment I used Elevenlabs who provide very high-quality voices but unfortunately are prohibitively expensive for hobbyist hackers. By the same token, OpenAI also offers a TTS API but as with Elevenlabs, it felt rather expensive for a hobby project.

At my $DAY_JOB we had great luck with PlayHT who do have decent pricing options for hackers and some handy features like single-shot voice cloning, etc.

NOTE: I have no affiliation with PlayHT.

Now, the last remaining issue was the lack of client libraries for the PlayHT API. Most of the companies build their AI agents in Python or JS/TypeScript so naturally PlayHT offer Python and Node.js SDKs.

Unfortunately, I needed Go and Rust libraries, so I had to roll my own. That was another fun endeavour which taught me a lot about the PlayHT API, speech synthesis, and Rust itself. You can find them on GitHub:

- Go Playht module: go-playht

- Rust library: playht_rs is also available as a Rust crate

Both repos contain a lot of examples which demonstrate how to use the client libraries. Say you want to create some audio narrated by your voice. You can record a small audio sample and then use the single-shot clone API endpoint to narrate arbitrary text. Here’s how you’d create a clone of your voice in Go:

package main

import (

"context"

"flag"

"log"

"github.com/milosgajdos/go-playht"

)

var (

input string

mimeType string

)

func init() {

flag.StringVar(&input, "input", "", "input voice sample")

flag.StringVar(&mimeType, "mime-type", "", "input MIME type")

}

func main() {

flag.Parse()

// Creates an API client with default options.

// * it reads PLAYHT_SECRET_KEY and PLAYHT_USER_ID env vars

// * uses playht.BaserURL and APIv2

client := playht.NewClient()

if input != "" {

if mimeType == "" {

log.Fatal("must specify input MIME type")

}

req := &playht.CloneVoiceFileRequest{

SampleFile: input,

VoiceName: "my-voice",

MimeType: mimeType,

}

cloneResp, err := client.CreateInstantVoiceCloneFromFile(context.Background(), req)

if err != nil {

log.Fatalf("failed to clone voice from file: %v", err)

}

log.Printf("clone voice response: %v", cloneResp)

}

}

If you now wanted to generate audio using this cloned voice in Rust, here’s one way you could go about it:

use playht_rs::{

api::{self, stream::TTSStreamReq, tts::Quality},

prelude::*,

};

use tokio::{fs::File, io::BufWriter};

#[tokio::main]

async fn main() -> Result<()> {

let mut args = std::env::args().skip(1);

let file_path = args.next().unwrap();

let voice_id = args.next().unwrap();

let client = api::Client::new();

let req = TTSStreamReq {

text: Some("What is life?".to_owned()),

voice: Some(voice_id),

quality: Some(Quality::Low),

speed: Some(1.0),

sample_rate: Some(24000),

..Default::default()

};

let file = File::create(file_path.clone()).await?;

let mut w = BufWriter::new(file);

client.write_audio_stream(&mut w, &req).await?;

println!("Done writing into {}", file_path);

Ok(())

}

As I said, there are plenty more examples in the actual GitHub repositories. So go check them out!

Having settled on Ollama for the LLM, the PlayHT API for TTS and NATS for message routing, the architecture diagram, zoomed in on one part of the conversation, looks like this:

With all the basics resolved I could finally proceed with building the bots!

I’ve structured the code for both bots in a similar way so it’d be easier to find my way around in both language codebases. Equally, I figured maybe there are some Go developers who are thinking of learning Rust and who might find it easier to understand the Rust code if it’s structured in a similar way to the Go code and vice versa. I’ll leave that to you to judge, though I shall warn you the project itself is just a fun experiment so don’t hold your breath when looking at the code!

gobot

I love Go. In spite of all its quirks and footguns that make me go through the full scale of emotions every once in a while. After having been programming in Go for what must be a decade now I feel quite comfortable with it, so hacking on the Go side of this project was a breeze. More or less.

From a very high-level point of view, the bot manages a group of worker goroutines each of which is responsible for a specific task:

- llm: prompts the LLM and dispatches the generated answers to the tts and JetStream writer goroutines (more on this below)

- tts: streams the audio from the PlayHT API into the default audio device

- goroutines handling JetStream tasks:

  - reader: subscribes to a JetStream subject and forwards whatever it receives on it to the llm goroutine (these are the actual messages received from rustbot sent to ollama as prompts)

  - writer: listens to messages sent to it via the llm goroutine and publishes them to a JetStream topic which is subscribed to by rustbot

NOTE: there is also a goroutine that traps an interrupt signal and notifies all the other goroutines so they stop doing whatever they’re doing before the program exits.

You can find the full code on GitHub but here’s a small code snippet that enables this:

// chunks for TTS stream

ttsChunks := make(chan []byte, 100)

// chunk for JetStream

jetChunks := make(chan []byte, 100)

// LLM prompts

prompts := make(chan string)

// ttsDone for signalling we're done talking

ttsDone := make(chan struct{})

g, ctx := errgroup.WithContext(ctx)

log.Println("launching gobot workers")

g.Go(func() error {

return tts.Stream(ctx, pipeWriter, ttsChunks, ttsDone)

})

g.Go(func() error {

return llm.Stream(ctx, prompts, jetChunks, ttsChunks)

})

g.Go(func() error {

return jet.Reader.Read(ctx, prompts)

})

g.Go(func() error {

return jet.Writer.Write(ctx, jetChunks, ttsDone)

})

There are a couple of things I wanna point out. I use the errgroup Go module to manage the goroutine cancellations and exits:

Wait blocks until all function calls from the Go method have returned, then returns the first non-nil error (if any) from them.

I also want to stress that gobot is the initiator of the conversation: it’s the bot that must be prompted before the conversation can proceed to the next stage. I do that by reading standard input and pushing each non-empty string I read into the Go channel monitored by the llm worker.

Here’s a simple diagram of how the individual goroutines communicate with each other via channels:

And here’s what’s going on in the diagram:

- jet.Reader receives a message published on a JetStream subject

- jet.Reader sends this message to the prompts channel

- llm worker reads the messages sent to the prompts channel and forwards them to ollama for LLM generation

- ollama generates the response and the llm worker sends it to both ttsChunks and jetChunks channels

- tts worker reads the message and sends the message to PlayHT API and streams the audio to the default audio device

- once the playback has finished tts worker notifies jet.Writer via the ttsDone channel that it’s done playing audio

- jet.Writer receives the notification on the ttsDone channel and publishes the message it received on jetChunks channel to a JetStream subject

That’s a very high-level flow. Hopefully the description makes what’s going on a bit clearer, but if you prefer the code, head over to the GitHub repository.
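For a self-contained feel of that channel choreography, here is a toy version that uses only the standard library. The LLM, the TTS playback and JetStream are replaced by stand-ins (upper-casing a string, doing nothing, appending to a slice), every answer is treated as a single chunk, and runPipeline is a name invented for this sketch. It only shows the hand-offs between the workers, not the real workers.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// runPipeline pushes inputs through four stand-in workers wired the
// same way as the flow described above and returns what the writer
// would have published, in order.
func runPipeline(inputs []string) []string {
	prompts := make(chan string)
	ttsChunks := make(chan []byte, 100)
	jetChunks := make(chan []byte, 100)
	ttsDone := make(chan struct{})

	var wg sync.WaitGroup
	var published []string
	wg.Add(4)

	// reader: stands in for the JetStream reader feeding prompts.
	go func() {
		defer wg.Done()
		defer close(prompts)
		for _, p := range inputs {
			prompts <- p
		}
	}()

	// llm: "generates" an answer and fans it out to both channels.
	go func() {
		defer wg.Done()
		defer close(ttsChunks)
		defer close(jetChunks)
		for p := range prompts {
			answer := []byte(strings.ToUpper(p))
			ttsChunks <- answer
			jetChunks <- answer
		}
	}()

	// tts: playback would happen here; afterwards it signals ttsDone.
	go func() {
		defer wg.Done()
		defer close(ttsDone)
		for range ttsChunks {
			ttsDone <- struct{}{}
		}
	}()

	// writer: publishes an answer only after it has been "spoken".
	go func() {
		defer wg.Done()
		for range ttsDone {
			published = append(published, string(<-jetChunks))
		}
	}()

	wg.Wait()
	return published
}

func main() {
	for _, msg := range runPipeline([]string{"hello rustbot", "how is the borrow checker?"}) {
		fmt.Println("published:", msg)
	}
}
```

The point of the ttsDone hand-off is ordering: the other bot only receives a message once the current bot has finished saying it out loud, which keeps the two from talking over each other.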

The code for the individual workers turned out to be much simpler than I had originally expected.

One thing I had to hack together was something I decided to call FixedSizeBuffer: a bytes buffer with a fixed size. It made it easier to flush the data streamed from the LLM as chunks (slices) of bytes before sending it over to the PlayHT API. If the buffer fills up, Write returns a specific error to notify the writer, so it can flush the data and reset the buffer before writing to it again. Here’s the code:

// NewFixedSizeBuffer creates a new FixedSizeBuffer with the given max size.

func NewFixedSizeBuffer(maxSize int) *Buffer {

b := make([]byte, 0, maxSize)

return &Buffer{

buffer: bytes.NewBuffer(b),

maxSize: maxSize,

}

}

// Write appends data to the buffer.

// It returns error if the buffer exceeds its maximum size.

func (fb *Buffer) Write(p []byte) (int, error) {

available := fb.buffer.Available()

if available == 0 {

return 0, ErrBufferFull

}

if len(p) > available {

p = p[:available]

}

n, err := fb.buffer.Write(p)

if err != nil {

return n, err

}

if fb.buffer.Len() == fb.maxSize {

return n, ErrBufferFull

}

return n, nil

}

// Reset resets the buffer

func (fb *Buffer) Reset() {

fb.buffer.Reset()

}

// String returns the contents of the buffer as a string.

func (fb *Buffer) String() string {

return fb.buffer.String()

}
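Here is a rough sketch of how a caller might react to that error. The Buffer below is a condensed stand-in for the type above (it computes the free space from maxSize instead of calling Available), ErrBufferFull is assumed to be a plain sentinel error since its definition isn’t shown in this post, and writeAll is a hypothetical helper, not code from the repo.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
)

// ErrBufferFull is an assumed sentinel error signalling a full buffer.
var ErrBufferFull = errors.New("buffer full")

// Buffer is a condensed stand-in for the fixed-size buffer above.
// It assumes maxSize > 0.
type Buffer struct {
	buffer  *bytes.Buffer
	maxSize int
}

func NewFixedSizeBuffer(maxSize int) *Buffer {
	return &Buffer{buffer: bytes.NewBuffer(make([]byte, 0, maxSize)), maxSize: maxSize}
}

// Write stores as much of p as fits and returns ErrBufferFull once the
// buffer has reached its maximum size.
func (fb *Buffer) Write(p []byte) (int, error) {
	available := fb.maxSize - fb.buffer.Len()
	if len(p) > available {
		p = p[:available]
	}
	n, _ := fb.buffer.Write(p) // bytes.Buffer.Write always returns a nil error
	if fb.buffer.Len() == fb.maxSize {
		return n, ErrBufferFull
	}
	return n, nil
}

func (fb *Buffer) Reset()         { fb.buffer.Reset() }
func (fb *Buffer) String() string { return fb.buffer.String() }

// writeAll feeds chunk into fb and calls flush with the buffered text
// every time the buffer fills up, until the whole chunk is consumed.
func writeAll(fb *Buffer, chunk []byte, flush func(string)) error {
	for len(chunk) > 0 {
		n, err := fb.Write(chunk)
		chunk = chunk[n:] // a full buffer may have accepted only part of the chunk
		if errors.Is(err, ErrBufferFull) {
			flush(fb.String())
			fb.Reset()
			continue
		}
		if err != nil {
			return err
		}
	}
	return nil
}

func main() {
	fb := NewFixedSizeBuffer(8)
	flush := func(s string) { fmt.Printf("flush: %q\n", s) }
	for _, chunk := range []string{"Hello, ", "gophers ", "and crabs!"} {
		if err := writeAll(fb, []byte(chunk), flush); err != nil {
			panic(err)
		}
	}
	// Flush whatever is left once the stream ends.
	if rest := fb.String(); rest != "" {
		flush(rest)
	}
}
```

The detail worth noticing is that Write can return both a byte count and ErrBufferFull, so the caller has to keep feeding the unwritten remainder of the chunk after each flush.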

Now, I want to stress that we “flush” the buffer data to the TTS API as strings by using the (*Buffer).String() method, which should set off alarm bells in any experienced Go programmer’s head. It simply spits out a string from whatever bytes are stored in the internal buffer at the point of flushing. These bytes do not necessarily form a correctly encoded UTF-8 string: if the buffer is small enough to split a multi-byte character across two flushes, the method produces a nonsensical Go string. So beware of that and tune the buffer size accordingly!

In my experience the chunks streamed by Ollama back to the client have always been valid strings, so it wasn’t an issue, but it might become one for non-English languages. YMMV! An alternative solution could be to assemble the whole generated message streamed from the LLM first and send that to the appropriate channels instead of chunking. We could also use the unicode/utf8 package from the Go standard library to check that the bytes form valid UTF-8, and if they don’t, either report it as an error or allow a small buffer overflow so that the incomplete character still fits into the buffer.
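One more way to handle it, sketched below with unicode/utf8, is to flush only up to the last complete character and carry the dangling bytes over to the next flush. This is not what gobot does today; splitAtRuneBoundary is a hypothetical helper that merely illustrates the idea.

```go
package main

import (
	"fmt"
	"unicode/utf8"
)

// splitAtRuneBoundary returns the longest prefix of b that does not end
// in the middle of a multi-byte UTF-8 sequence, plus the leftover bytes
// that should be prepended to the next chunk.
func splitAtRuneBoundary(b []byte) (complete, rest []byte) {
	// A UTF-8 sequence is at most utf8.UTFMax (4) bytes long, so only
	// the last few bytes can belong to an incomplete character.
	for i := len(b) - 1; i >= 0 && i >= len(b)-utf8.UTFMax; i-- {
		if utf8.RuneStart(b[i]) {
			if utf8.FullRune(b[i:]) {
				return b, nil // the tail is a complete character
			}
			return b[:i], b[i:]
		}
	}
	// No start byte near the end: leave the data untouched.
	return b, nil
}

func main() {
	b := []byte("naïve café")
	// Cut in the middle of the two-byte "ï" to mimic a buffer that
	// filled up at an unlucky spot.
	complete, rest := splitAtRuneBoundary(b[:3])
	fmt.Printf("flush %q, carry over %d byte(s)\n", complete, len(rest))

	// Prepend the carried-over bytes to the data that arrives next.
	next := append(append([]byte(nil), rest...), b[3:]...)
	complete, rest = splitAtRuneBoundary(next)
	fmt.Printf("flush %q, carry over %d byte(s)\n", complete, len(rest))
}
```

The helper only guards against a character being cut at the end of a chunk; bytes that are invalid for other reasons pass through unchanged, so a utf8.Valid check would still be needed if that matters.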

Another tricky thing was getting the audio playback working on the default audio device on macOS. This was arguably the thing I spent the longest banging my head against the desk over. Eventually, I settled on using the github.com/gopxl/beep Go module which did the trick, but I still need to figure out how to run the playback in a goroutine so I can pipe data to it through io.Pipe. The way it works now is as follows:

// create an io.Pipe for streaming audio

pipeReader, pipeWriter := io.Pipe()

...

...

// pass the pipeWriter into tts worker

g.Go(func() error {

return tts.Stream(ctx, pipeWriter, ttsChunks, ttsDone)

})

...

...

// stream the MP3 encoded data into the audio player

streamer, format, err := mp3.Decode(pipeReader)

if err != nil {

log.Fatalf("failed to initialize MP3 decoder: %v\n", err)

}

defer streamer.Close()

if err := speaker.Init(format.SampleRate, format.SampleRate.N(time.Second/10)); err != nil {

log.Printf("failed to initialize speaker: %v\n", err)

}

speaker.Play(beep.Seq(streamer, beep.Callback(func() {

<-ctx.Done()

})))

When I tried playing the audio in a goroutine I was seeing the io.PipeReader part of the pipe getting closed down for some reason. Locking the playback goroutine to the main thread did not seem to fix the issue, but running the player in a blocking mode in the main goroutine worked like a charm. I need to look into that more closely, but at this point the playback was working so I was happy to move on from these macOS audio woes to more interesting things.
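Stripped of the audio bits, the arrangement that ended up working looks roughly like the sketch below: a background goroutine writes into the pipe while the calling goroutine blocks on the read side. The MP3 decoder and the speaker are replaced by io.ReadAll, and streamThroughPipe is a made-up name, so this only illustrates the io.Pipe plumbing and not the playback itself.

```go
package main

import (
	"fmt"
	"io"
)

// streamThroughPipe writes chunks into an io.Pipe from a background
// goroutine and drains the read side on the calling goroutine.
func streamThroughPipe(chunks [][]byte) ([]byte, error) {
	pipeReader, pipeWriter := io.Pipe()

	// Producer: stands in for the tts worker writing audio data.
	go func() {
		for _, c := range chunks {
			if _, err := pipeWriter.Write(c); err != nil {
				// The read side was closed; stop producing.
				return
			}
		}
		// Closing the writer makes the reader see io.EOF.
		pipeWriter.Close()
	}()

	// Consumer: stands in for the decoder and player. It blocks here
	// until the writer is closed.
	return io.ReadAll(pipeReader)
}

func main() {
	audio, err := streamThroughPipe([][]byte{[]byte("chunk-1 "), []byte("chunk-2")})
	if err != nil {
		panic(err)
	}
	fmt.Printf("received %d bytes: %s\n", len(audio), audio)
}
```

An io.Pipe has no internal buffer, so every Write blocks until the reader has consumed the data. That makes the two sides tightly coupled, which is worth keeping in mind when debugging a pipe that appears to close unexpectedly.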

There are a couple more things I’d like to point out about the Go implementation. The llm worker actually uses the github.com/tmc/langchaingo Go module rather than ollama’s own Go module. I felt it was a bit nicer to work with and also thought of potentially tapping into some of the more advanced prompting techniques in the future if I wanted to. At the moment the prompting side of things in either of the bots is extremely rudimentary: there is a simple seed prompt passed in via the CLI that gives the LLM some basic instructions. You can find it in the GitHub repo.

Let’s move on to rustbot now!

rustbot

The high-level design of the Rust counterpart of this experiment is pretty much the same. We spawn a bunch of tokio tasks that work to fulfil the same goals as gobot’s goroutines. The main difference, at least when it comes to communication between the spawned tasks, is that Rust does not have bidirectional channels. As I mentioned in one of my previous blog posts, Rust channels have two distinct ends: one for reading and one for writing. The code of the main function looks something like this:

...

...

let (prompts_tx, prompts_rx) = mpsc::channel::<String>(32);

let (jet_chunks_tx, jet_chunks_rx) = mpsc::channel::<Bytes>(32);

let (tts_chunks_tx, tts_chunks_rx) = mpsc::channel::<Bytes>(32);

let (aud_done_tx, aud_done_rx) = watch::channel(false);

// NOTE: used for cancellation when SIGINT is trapped.

let (watch_tx, watch_rx) = watch::channel(false);

let jet_wr_watch_rx = watch_rx.clone();

let jet_rd_watch_rx = watch_rx.clone();

let tts_watch_rx = watch_rx.clone();

let aud_watch_rx = watch_rx.clone();

println!("launching workers");

let (_stream, stream_handle) = OutputStream::try_default().unwrap();

let sink = Sink::try_new(&stream_handle).unwrap();

let (audio_wr, audio_rd) = io::duplex(1024);

let tts_stream = tokio::spawn(t.stream(audio_wr, tts_chunks_rx, tts_watch_rx));

let llm_stream = tokio::spawn(l.stream(prompts_rx, jet_chunks_tx, tts_chunks_tx, watch_rx));

let jet_write = tokio::spawn(s.writer.write(jet_chunks_rx, aud_done_rx, jet_wr_watch_rx));

let jet_read = tokio::spawn(s.reader.read(prompts_tx, jet_rd_watch_rx));

let audio_task = tokio::spawn(audio::play(audio_rd, sink, aud_done_tx, aud_watch_rx));

let sig_handler = tokio::spawn(signal::trap(watch_tx));

...

...

Probably the most notable difference in comparison to the gobot implementation, other than the two-ended channels, is that we are spawning a dedicated task for audio playback. This works like a charm using the rodio crate, though the actual playback handling code is a bit more elaborate than in the Go implementation since rodio is a much lower-level library than the beep Go module I used in gobot.

NOTE: the beep module actually builds on top of ebitengine’s oto module, which packs the lower-level multiplatform implementation of sound playback leveraged by beep.

Another notable thing, especially for Go developers, is the cloning of the receiving end of the channel that notifies the spawned tasks that they need to exit. I wrote about different cancellation patterns in one of my previous blog posts and this is one of the real-life use cases. There might be a better way to do this but I’m still a Rust n00b, so this did the trick for me. The sending end of the channel is passed to the signal::trap function which handles the OS interrupt signal (fired by the OS when you press Ctrl+C) and notifies all the other spawned tasks so they can clean up before they exit.

In Go you’d usually create a single channel and pass it down to individual goroutines, though there are some other techniques [and patterns] such as context cancellation, which is what I use in the gobot code: I create a shared context and then pass it into the errgroup and all the goroutines; the goroutines listen on the cancellation channel via a select statement. Here’s a small code sample:

...

...

ctx := context.Background()

// we will cancel this context when we trap interrupt signal

ctx, cancel := context.WithCancel(ctx)

sigTrap := make(chan os.Signal, 1)

signal.Notify(sigTrap, os.Interrupt)

defer func() {

signal.Stop(sigTrap)

// NOTE: multiple calls to cancel don't

// cause any issues in Go

cancel()

}()

go func() {

<-sigTrap

log.Println("shutting down: received SIGINT...")

cancel()

}()

...

...

// Create waitgroup with context that may be cancelled

g, ctx := errgroup.WithContext(ctx)

...

...

// example code that waits for the cancellation

select {

case <-ctx.Done():

return ctx.Err()

}

...

...

tokio has an “equivalent” of Go’s errgroup.Wait function called try_join!. That’s actually a Rust macro (hence the !). The concrete implementation is a bit different but conceptually they both work in a similar way:

Wait on multiple concurrent branches, returning when all branches complete with Ok(_) or on the first Err(_).

Just like in the Go implementation, you will also find an implementation of the fixed bytes buffer in the rustbot code. It works the same way as in gobot. Here’s the code:

use bytes::{BufMut, Bytes, BytesMut};

use std::error::Error;

use std::fmt;

#[derive(Debug)]

pub struct BufferFullError {

pub bytes_written: usize,

}

impl fmt::Display for BufferFullError {

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

write!(f, "buffer is full, {} bytes written", self.bytes_written)

}

}

impl Error for BufferFullError {}

pub struct Buffer {

buffer: BytesMut,

max_size: usize,

}

impl Buffer {

pub fn new(max_size: usize) -> Self {

Buffer {

buffer: BytesMut::with_capacity(max_size),

max_size,

}

}

pub fn write(&mut self, data: &[u8]) -> Result<usize, BufferFullError> {

let available = self.max_size - self.buffer.len();

let write_len = std::cmp::min(data.len(), available);

self.buffer.put_slice(&data[..write_len]);

if self.buffer.len() == self.max_size {

return Err(BufferFullError {

bytes_written: write_len,

});

}

Ok(write_len)

}

pub fn reset(&mut self) {

self.buffer.clear();

}

pub fn as_bytes(&self) -> Bytes {

self.buffer.clone().freeze()

}

}

The only difference is that instead of having a function that returns the String I’ve opted to return the underlying bytes and then decode them as a UTF-8 String on the consumer side. The code that does it looks like this:

let msg = String::from_utf8(b.to_vec())?;

As I mentioned earlier we could do the same thing in the Go codebase using the unicode/utf8 package.

I use the ollama-rs crate for communicating with ollama and the already mentioned playht_rs made by yours truly to talk to the PlayHT API. Everything else maps more or less the same way to how things are done in gobot.

And that’s pretty much it, folks! All that remains to be done is taking the bots for a spin. You can find the instructions in the GitHub repo README. Mind you, things can be a bit hairy and bug-ridden at the moment, so feel free to open a PR if you come across something and fancy fixing it.

There is an awful lot more I could carry on talking about, but I think it’s probably better if you go and see the actual code and play with it. Nothing beats reading through the actual implementation, so I will leave it at that.

Conclusion

This project has come into existence over the past couple of weeks of hacking late nights and early mornings. At times I felt like I was building a plane during take-off, especially the Rust parts, which I was literally picking up along the way. It’s been a tremendously fun experience that I’ve really enjoyed. It made me appreciate both Go and Rust in different ways as similar yet so different programming languages. I was forced to build API client libraries for a TTS provider which made me understand and appreciate the work that goes into audio streaming and audio-based applications. I hope you find at least some of this code useful. Take it, modify it. Just go, build and give your own bots a voice!