This summer I spent quite a bit of time speaking to various people about “intelligent” Edge computing. I put double quotes around the word intelligent to avoid the wrath of the thought leaders on the internet as they fight each other over what intelligence is and what it is not.

The more I talked to people, the more I realized the possibilities and opportunities edge computing opens up for the future: “injecting” intelligence into dumb physical objects seems akin to injecting “life” into dead objects, at least for us sci-fi fans, which makes for an interesting vision of the future (for brevity, let’s ignore the IoT security issues in this post). The possibilities are even more exciting in light of machine learning and neural network models getting lighter and more easily deployable in the physical space. And let’s not forget about all the efforts to bring intelligence into some variation of IoT blockchain.

I decided to take a closer look into this, so I started looking around for the options available to a random hacker who wants to get their hands dirty by building something as fast as possible. One of the things that grabbed my attention was the Intel Movidius Neural Compute Stick (NCS). I’ve been aware of it for a while but never really had a chance or proper interest in it until recently. The NCS is a small USB-powered device which packs a Myriad 2 VPU and allows for much faster neural network inference than a traditional CPU.

It’s no secret I’ve been enjoying hacking on Go for a while now, so naturally I searched for NCS Go libraries. To my surprise, the options available to Go programmers were rather limited. There is a Go package by the awesome folks from Hybridgroup, but it doesn’t provide full NCSDK coverage. However, it does offer just enough API coverage to get your hands dirty hacking if you don’t need anything too complex. And so I did! But before I get into details, let’s talk about the development environment setup.

Intel Movidius NCS and macOS setup

Besides the lack of Go library options, another thing which kind of annoyed me, being a macOS user, was that there was no support for compiling the NCSDK on macOS. If I wanted to use the Hybridgroup Go package I wouldn’t be able to use it on my laptop, so I looked into whether I could hack around it somehow. It turns out it wasn’t too hard to get the API V1 libraries compiled and installed on macOS (High Sierra), so I opened a PR which was merged in and which hopefully made life easier for the macOS folks who attended the Gophercon 2018 NCS workshop run by Ron Evans from Hybridgroup. You can find the instructions on how to set up the API V1 on your macOS in my GitHub fork.

After hacking a few examples I got my eyes on API V2, which offered significant improvements over the V1 API, such as inference queues, multiple graphs (we’ll talk about what a graph is later) etc. Once again I spent a bit of time trying to figure out how to get the V2 NCSDK working on macOS so I wouldn’t have to use Vagrant to boot up a Linux VM. Unfortunately, to my knowledge, Docker on Mac does not provide any easy way to do USB passthrough, so Docker was off the table and Vagrant was the only option to go with. Whilst I did manage to compile and install the API V2 libraries on macOS (High Sierra) (you can find the API V2 macOS fork here), the communication with the NCS device just wasn’t working at all. After a bit of debugging I got really annoyed and booted a Linux VM (the Vagrantfile I’m using can be found in my GitHub gist).

Once I got my setup working and my laptop’s USB port was talking to the VM running in VirtualBox, I could finally focus on hacking on Go bindings for the V2 NCSDK. But before I talk about the Go NCS package, I want to quickly discuss how the NCS device works.

Intel Movidius NCS concepts

There are several concepts you might want to understand before you start hacking on NCS. As I mentioned earlier, the NCS device packs a Myriad 2 VPU which handles the machine learning model inference execution. The VPU is powered via USB, which also serves as a transport layer for transferring the machine learning model onto the device where the VPU can do its thing. The NCSDK provides libraries that allow you to transfer the model to the device, send it the data for inference, and request the inference results from the VPU once the model is on the device.

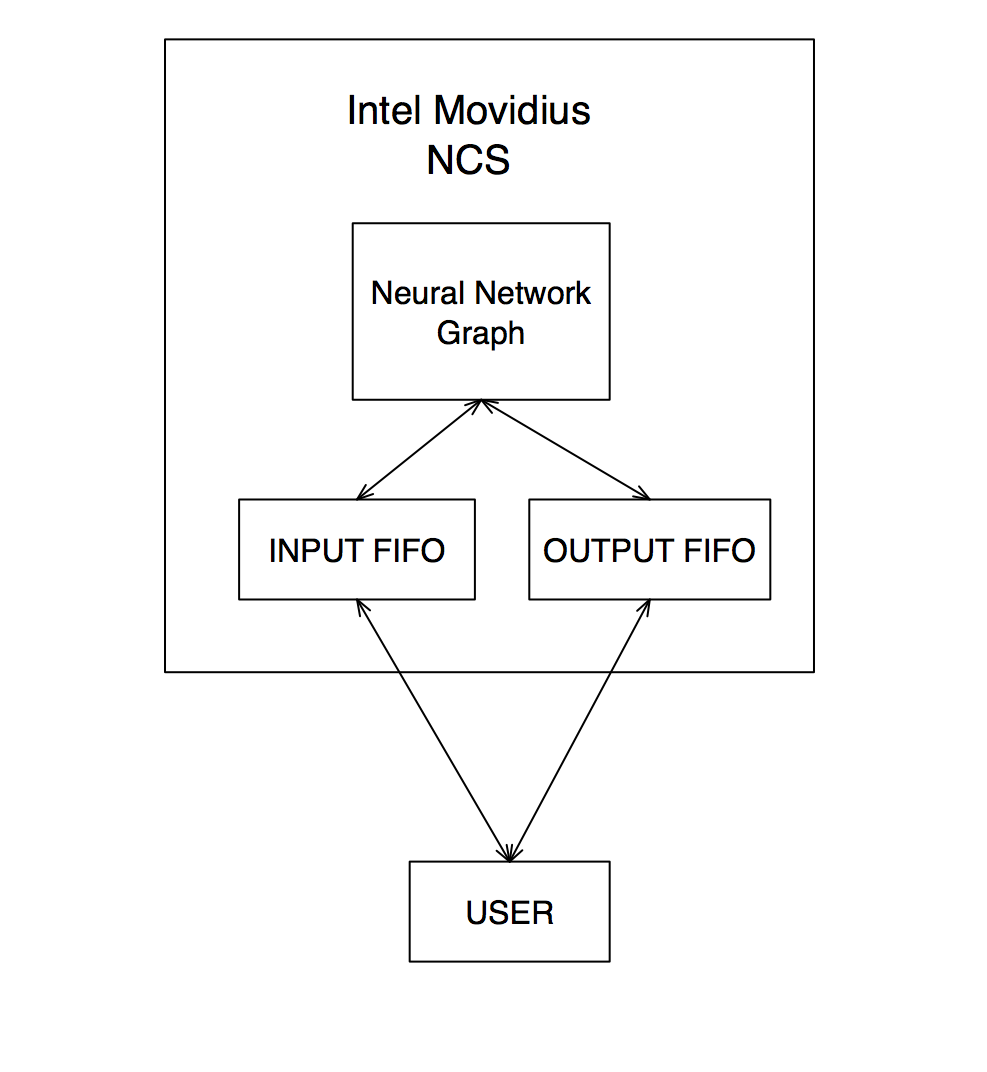

Machine learning models which can be loaded into the NCS need to be compiled into a predefined data format blob, the details of which I could not find anywhere, but let’s ignore that for now and just remember that the compiled model data blob is called an NCS Graph. So how does one create the NCS Graph? The Intel Movidius NCSDK provides a semi-functional toolkit (prepare to be frustrated at times) which allows you to grab TensorFlow or Caffe models and compile them into the NCS graph, which can then be loaded onto the NCS.

Once the graph has been compiled and loaded on the device you can use it for inference. In order to do that you need to allocate at least two unidirectional FIFO queues which provide an interface for communication with the NCS device: you submit the data for inference through one and pick up the results from the other once they’ve been computed. That’s the high-level overview of how things work on the NCS device. There are a lot of cool things you can do using the NCSDK, such as monitoring the inference performance or even the temperature of the device itself. Just read the official documentation for more details.
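To make the two-queue idea a bit more concrete, here is a minimal sketch that models it with plain Go channels. It does not use the NCSDK or the Go bindings at all: `tensor` and `runDevice` are hypothetical names made up for illustration, and the "inference" is a placeholder that just sums its input. The only point is the shape of the data flow: one queue carries data from the host to the device, the other carries results back.

```go
package main

import "fmt"

// tensor is a hypothetical stand-in for the data exchanged with the device.
type tensor []float32

// runDevice plays the role of the NCS in this sketch: it reads inputs from
// one unidirectional queue and writes results to another. The "inference"
// here is a placeholder that simply sums the input values.
func runDevice(in <-chan tensor, out chan<- tensor) {
	for t := range in {
		var sum float32
		for _, v := range t {
			sum += v
		}
		out <- tensor{sum}
	}
	close(out)
}

func main() {
	// Two unidirectional queues: host -> device and device -> host.
	in := make(chan tensor, 2)
	out := make(chan tensor, 2)
	go runDevice(in, out)

	// Submit data for inference.
	in <- tensor{1, 2, 3}
	in <- tensor{4, 5}
	close(in)

	// Pick up the results once they have been computed.
	for res := range out {
		fmt.Println(res[0])
	}
}
```

With the real device the queues are allocated through the NCSDK rather than created as channels, but the submit-then-collect pattern is the same.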

Now that you have a slightly better idea how things work, let’s move on to talk about the Go bindings.

Intel Movidius NCS Go bindings

With a bit of occasional help from Ron Evans I spent a few weeks in summer hacking on the API V2 NCSDK Go bindings. Both my Go package and Ron’s use cgo, which allows binding to the existing C/C++ NCSDK libraries. Of course I would much prefer the memory safety of native Go bindings, but I didn’t have much spare time to read through the heaps of C/C++ code that is shipped along with the NCSDK libraries. As I was hacking through I encountered all kinds of random bugs which made me refresh my knowledge of gdb :-) and I learnt loads about cgo and Movidius NCS. Eventually I got most of the NCSDK API V2 covered in a Go package which is available on my GitHub repo.

You can start hacking on it straight away, of course once you have the VM set up as I discussed earlier. My workstation is running macOS High Sierra on a MacBook Pro (Late 2016), which only ships with USB-C ports. However, the Intel Movidius NCS only offers a USB 2.0 interface, so you will have to buy one of those annoying dongles to get the NCS connected to macOS before you’re ready to hack. Once you are ready you can try connecting to the NCS by using the following code:

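```go
package main

import (
	"log"

	// Import path assumed here; check the project repo for the actual one.
	"github.com/milosgajdos83/ncs"
)

func main() {
	log.Printf("Attempting to create NCS device handle")
	dev, err := ncs.NewDevice(0)
	if err != nil {
		log.Printf("Failed to create NCS device handle: %v", err)
		return
	}
	defer dev.Destroy()
	log.Printf("NCS device handle successfully created")

	log.Printf("Attempting to open NCS device")
	if err = dev.Open(); err != nil {
		log.Printf("Failed to open NCS device: %v", err)
		return
	}
	defer dev.Close()
	log.Printf("NCS device successfully opened")

	log.Printf("Attempting to create NCS graph handle")
	graph, err := ncs.NewGraph("NCSGraph")
	if err != nil {
		log.Printf("Failed to create NCS graph handle: %v", err)
		return
	}
	defer graph.Destroy()
	log.Printf("NCS graph handle successfully created")

	log.Printf("Attempting to create NCS FIFO handle")
	fifo, err := ncs.NewFifo("TestFIFO", ncs.FifoHostRO)
	if err != nil {
		log.Printf("Failed to create NCS FIFO handle: %v", err)
		return
	}
	// Defer the cleanup only after the error check, once the handle is valid.
	defer fifo.Destroy()
	log.Printf("NCS FIFO handle successfully created")
}
```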

This code does not do much: it opens a connection to the Intel Movidius NCS device, allocates an NCS Graph handle (it does not load the actual graph onto the device!) and finally it creates an NCS FIFO queue. If everything goes fine, the program cleans up after itself by closing all opened handles and exits. Check out the godoc for the Go coverage.

Movidius Neural Networks magic

Once you get past the basics, you can start doing some really cool stuff, especially with the abundance of pretrained neural networks available on the internet. I hacked a bunch of examples you can find in the project repository, so do check them out if you need some inspiration, if things are still not quite clear to you, or if you just want to get started on something more advanced.

I’m not going to talk about the code too much; I just want to give you a bit of an idea of what you can already do. One of the examples in the project repo shows how to use SSD Mobilenet for detecting objects in images. Mobilenets are pretty tiny neural networks which are suitable for and targeted at smaller devices such as the Raspberry Pi. Their mAP (mean average precision) is not as high as that of the big chunky neural network beasts, as the number of neural network parameters is significantly smaller, but these models provide faster inference times and lower power consumption. In fact, the mAP is getting better, so these tiny neural networks are already good enough to do cool stuff like this:

The above image was produced by using Mobilenet-SSD loaded onto the NCS. Now, the NCSDK V2 allows you to use more than one device, each of which can have different graphs and different queues. This is where it gets really interesting. You can combine an object detection network with another network loaded on another device plugged into your laptop, and thus combine the results and do some really interesting and useful things. Let’s say you detect a bunch of dogs in some picture or video. You can then pass the results to a neural network which identifies a particular dog breed and send the result somewhere.
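The dog example can be sketched as a tiny two-stage pipeline. Everything in the snippet below is hypothetical and exists only for illustration: the `Detection` type, the `Detector` and `Classifier` interfaces, the `Pipeline` function and the stub implementations are not part of the NCSDK or the Go bindings. In a real setup each interface would wrap a graph loaded on its own NCS device; here the stubs return canned results so the flow can run anywhere.

```go
package main

import "fmt"

// Detection is a hypothetical result produced by an object detection network.
type Detection struct {
	Label string
	Score float32
}

// Detector and Classifier are hypothetical interfaces. In practice each one
// would wrap a graph loaded on a separate NCS device.
type Detector interface {
	Detect(image []byte) []Detection
}

type Classifier interface {
	Classify(image []byte, d Detection) string
}

// Pipeline runs the detector and passes every detection that has the wanted
// label and a score of at least minScore on to the classifier.
func Pipeline(det Detector, cls Classifier, image []byte, label string, minScore float32) []string {
	var results []string
	for _, d := range det.Detect(image) {
		if d.Label != label || d.Score < minScore {
			continue
		}
		results = append(results, cls.Classify(image, d))
	}
	return results
}

// stubDetector and stubClassifier return canned results for illustration.
type stubDetector struct{}

func (stubDetector) Detect([]byte) []Detection {
	return []Detection{{"dog", 0.92}, {"cat", 0.88}, {"dog", 0.31}}
}

type stubClassifier struct{}

func (stubClassifier) Classify([]byte, Detection) string { return "beagle" }

func main() {
	breeds := Pipeline(stubDetector{}, stubClassifier{}, nil, "dog", 0.5)
	fmt.Println(breeds) // prints "[beagle]"
}
```

Keeping the two stages behind small interfaces means either model can be swapped, or moved to a different device, without touching the code that glues them together.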

One of the funnier things I hacked at home used a facenet to recognize my face and emotions; I then connected it to another crufty Go library I hacked a while ago which automatically plays a different song based on my detected emotion. It’s just a toy example, and obviously I get it’s kinda creepy, but it was a fun thing to connect neural networks with the Spotify or Slack API. The alertify package allows you to send messages on Slack too, so you can let people know how you’re feeling without even typing it: probably not the best idea, but I wanted to mention the creepy future of office work which surely and sadly awaits us.

Conclusion

I hope this post gave you a bit of an idea about how to use Intel Movidius NCS with Go and that you are ready to hack some cool stuff. Do check out the project repo and don’t be afraid to open an issue or a PR with anything. There is so much more I haven’t covered in the post, such as video processing using the NCS and the gocv computer vision library, or many other cool things you can do. If you want to learn some basics you can check out the examples in one of my GitHub repos which cover the basics of GoCV: it would be awesome if you could contribute new lessons which can make it easier for the Go community to hack on computer vision.

Before I finish this blog post, I want to give you a heads-up that working with NCS is not as easy as it seems: be aware that for whatever reason Intel has taken down the source code of their NCSDK from the official repo, but the precompiled libraries and toolkit are still available for download. Hopefully, though, if you love hacking on Go and are not afraid to tackle hard problems and overcome hurdles, NCS and Go can provide you with a platform to build some really cool things.

With the dawn of edge computing I can’t wait to get my hands on an Edge TPU, if I ever manage to get hold of one any time soon in Europe. Having native TensorFlow support on the device will open even more opportunities for hackers around the world who don’t have much money to spend on crazy expensive GPUs and/or who can’t afford the power consumption of heavy inference devices in the physical world. I’m super excited about the future and what the tremendous number of hackers will build over the coming years.