Over the past few months, I have been rekindling my interest in graph theory. I’m not quite sure what caused this shift, as I haven’t played with graphs much since I graduated many moons ago, besides the occasional interview preparation. Maybe it was reading about all the new graph databases that have come into existence over the past few years, or maybe it was my interest in Graph Neural Networks, which also seem to have (re)gained a lot of attention in the research community. Or maybe, stimulated by the recent coronavirus outbreak, it was the result of my random thinking about the ever more interconnected nature of our world.

I can’t pinpoint it to any single event, but there I was thinking about the world in terms of graphs and networks like a lunatic as one of my good friends Álex González could tell when during one of our occasional catch-ups I blurted at him:

Alex, graphs are everywhere!

The truth is, graph theory is an incredible tool for modeling many things in the real world and I believe more and more people will soon start realizing that. The Covid-19 outbreak amplified the utility of graphs and networks, be it for the simulation of the outbreaks, the interactions between the mutating virus(es) and genes, or the general supplier networks.

Most recently, Stephen Wolfram introduced a new theory of physics which seems to be fundamentally based on graphs. Roam Research has been enjoying a lot of success in the consumer product space, whilst a lot of forward-thinking enterprises have been steadily investing in building and improving their knowledge graphs.

Unfortunately, I have not had the luck of being involved in any of these amazing efforts. Recently, my professional life has mostly revolved around Kubernetes and the badly needed developer productivity tooling. The Covid-19 outbreak left me with a bit of extra time on my hands, which I decided to invest in building a project which would have graphs at its core. It was the classic “solution looking for a problem” kind of situation, but I was fine with that because I figured it would still lead me to learn something new, which is always great! I wanted to create a project which would combine both Kubernetes and graph theory, so I eventually came up with an idea for a tool that would allow building the graph of Kubernetes API objects. Given the size of the clusters I’ve been working on recently for some of my clients, I’ve been wondering about the interaction between the API objects in general. Projecting the API objects into some graph could help me understand it better. Or so I thought!

This blog post introduces the project and describes both how I went about building it and why and how it could be useful to people, in particular, the ones working on the intersection of infrastructure and cybersecurity. Nuff’ talk, let’s get started!

Kraph: API object graph Go module

When I started working on the project a few weeks ago, I had a very vague idea about the end result. I realized I needed to give it a proper “think” if the project were to become useful to me [or anyone else]. I knew I wanted to build it in one of my favorite programming languages, Go; this would also have a nice practical side-effect as Kubernetes itself is written in Go and thus provides a wealth of Go packages to interact with its API.

I also knew I needed a reasonably stable (well maintained) graph package as I wanted to focus on the higher-level problem – building the API object graph – as opposed to building the low-level graph primitives. I’ve been a huge fan of the gonum project which provides a rich set of well designed Go packages for scientific computing, including the package for working with graphs. There are probably a lot of other Go packages for working with graphs, but my love affair with gonum goes way back. I used it for building a lot of other personal projects of mine like the Self-organising Maps, Hopfield Networks or most recently the Statistical estimation module which provides an implementation of various statistical filtering algorithms like particle and Kalman filters.

The gonum graph package provides a rich set of features that pretty much covered all of my requirements for building and analyzing the graphs. This includes the dedicated package for analyzing [graph] networks, which would be incredibly useful for analyzing dynamic relations between the API objects (such as Kubernetes services, service accounts etc.). As a bonus, the project also provides various graph encoders that allow encoding the graphs in different formats, such as the famous GraphViz DOT format or GraphQL.

Now that I had picked the graph package, the second step was designing a simple module API that would allow for the use-cases I had in mind. As I was thinking through the design it occurred to me that the project could find a much wider use than just building the graph of the Kubernetes API objects; I figured it might be useful to turn the project into a “generic” API object graph builder with pluggable graph building/scraping modules, of which the Kubernetes API would be just one of many packages. Here is the design I settled on after a few experiments.

At the heart of the project there are two Go packages which define the core Go interfaces:

- api: defines the Go interfaces for [mapping of] the API resources and objects
- store: defines the Go interfaces for storing and querying of the mapped API objects

Let’s have a closer look at each of the packages in a little bit more detail.

api package

At the core of the api Go package there are two interfaces:

- Resource: defines an API [group] resource
- Object: is an instance of the API resource

You can see the API of each of these below:

    // Resource is an API resource
    type Resource interface {
        // Name returns resource name
        Name() string
        // Kind returns resource kind
        Kind() string
        // Group returns resource group
        Group() string
        // Version returns resource version
        Version() string
        // Namespaced returns true if the resource is namespaced
        Namespaced() bool
    }

    // Object is an instance of a Resource
    type Object interface {
        // UID is object uid
        UID() UID
        // Name is object name
        Name() string
        // Kind is object kind
        Kind() string
        // Namespace is object namespace
        Namespace() string
        // Links returns all object links
        Links() []Link
    }

Notice the Links function which tracks the links (i.e. relations) between the objects, allowing for building the object graph.
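To make the role of Links a bit more concrete, here is a minimal, self-contained sketch. The UID and Link types are not shown in this post, so the definitions below are hypothetical stand-ins, as are the toy object type and the Edges helper; the real kraph types may look different.

```go
package main

import "fmt"

// UID and Link are hypothetical stand-ins: the post does not show
// how kraph actually defines them.
type UID string

type Link struct {
	To       UID    // UID of the object this link points to
	Relation string // free-form relation name, e.g. "owner"
}

// Object mirrors the interface from the post.
type Object interface {
	UID() UID
	Name() string
	Kind() string
	Namespace() string
	Links() []Link
}

// object is a toy in-memory implementation of Object.
type object struct {
	uid   UID
	name  string
	kind  string
	ns    string
	links []Link
}

func (o object) UID() UID          { return o.uid }
func (o object) Name() string      { return o.name }
func (o object) Kind() string      { return o.kind }
func (o object) Namespace() string { return o.ns }
func (o object) Links() []Link     { return o.links }

// Edges flattens the links of all objects into (from, to) UID pairs,
// which is all a graph builder needs to connect the nodes.
func Edges(objs []Object) [][2]UID {
	var edges [][2]UID
	for _, o := range objs {
		for _, l := range o.Links() {
			edges = append(edges, [2]UID{o.UID(), l.To})
		}
	}
	return edges
}

func main() {
	rs := object{uid: "rs1", name: "web", kind: "ReplicaSet", ns: "default"}
	pod := object{uid: "p1", name: "web-abc", kind: "Pod", ns: "default",
		links: []Link{{To: "rs1", Relation: "owner"}}}

	for _, e := range Edges([]Object{rs, pod}) {
		fmt.Printf("%s -> %s\n", e[0], e[1])
	}
}
```

Each object only knows its own outgoing links; the graph emerges once the links of all objects are collected.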

It’s hard to deny the design has been inspired/driven by the Kubernetes API design to some extent. Kubernetes proved to be an excellent learning “playground” as its API provides various versioned groups of API resources. There can be multiple instances (objects) of a particular API resource group at any time. You could see a similar pattern in AWS API where you have for example different generations of EC2 instances each accessible via different API versions.

Besides the two core api package interfaces I realized I also needed some simple pluggable interfaces that would allow for scanning of the remote APIs and mapping the objects and their links which would lead to building the API object graph. I settled on a simple Client interface shown below:

    // Client discovers API resources and maps API objects
    type Client interface {
        Discoverer
        Mapper
    }

    // Discoverer discovers remote API
    type Discoverer interface {
        // Discover returns the discovered API
        Discover() (API, error)
    }

    // Mapper maps the API into topology
    type Mapper interface {
        // Map returns the API topology
        Map(API) (Top, error)
    }

    // API is a map of all available API resources
    type API interface {
        // Resources returns all API resources
        Resources() []Resource
        // Get returns all API resources matching the given query
        Get(...query.Option) ([]Resource, error)
    }

    // Top is an API topology i.e. the map of Objects
    type Top interface {
        // Objects returns all objects in the topology
        Objects() []Object
        // Get queries the topology and returns all matching objects
        Get(...query.Option) ([]Object, error)
    }

The idea here was to have a client which would be able to first scan (Discover) all available resources (and resource groups) in a given API and then Map them into a topology (Top) which could then be used for building the graph. The design could probably be made much simpler, but it would do for the time being.
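The two-phase flow can be sketched with a fake, in-memory client. This is only an illustration under simplifying assumptions: Resource and Object are reduced to plain structs, the Get methods are dropped because the query package is not shown here, and fakeClient and Topology are hypothetical names that do not come from the project.

```go
package main

import (
	"errors"
	"fmt"
)

// Simplified stand-ins for the interfaces in the post.
type Resource struct{ Name, Kind string }
type Object struct{ Name, Kind string }

type API interface{ Resources() []Resource }
type Top interface{ Objects() []Object }

type Discoverer interface{ Discover() (API, error) }
type Mapper interface{ Map(API) (Top, error) }

type Client interface {
	Discoverer
	Mapper
}

type staticAPI []Resource

func (a staticAPI) Resources() []Resource { return a }

type staticTop []Object

func (t staticTop) Objects() []Object { return t }

// fakeClient "discovers" a fixed list of resources and maps every
// resource kind to a canned list of object names.
type fakeClient struct {
	resources []Resource
	objects   map[string][]string // kind -> object names
}

func (c fakeClient) Discover() (API, error) {
	if len(c.resources) == 0 {
		return nil, errors.New("no resources discovered")
	}
	return staticAPI(c.resources), nil
}

func (c fakeClient) Map(a API) (Top, error) {
	var top staticTop
	for _, r := range a.Resources() {
		for _, name := range c.objects[r.Kind] {
			top = append(top, Object{Name: name, Kind: r.Kind})
		}
	}
	return top, nil
}

// Topology runs the two phases in order: discover first, then map.
func Topology(c Client) (Top, error) {
	a, err := c.Discover()
	if err != nil {
		return nil, fmt.Errorf("discover: %w", err)
	}
	return c.Map(a)
}

func main() {
	c := fakeClient{
		resources: []Resource{{Name: "pods", Kind: "Pod"}},
		objects:   map[string][]string{"Pod": {"web-abc", "web-def"}},
	}
	top, err := Topology(c)
	if err != nil {
		panic(err)
	}
	for _, o := range top.Objects() {
		fmt.Println(o.Kind, o.Name)
	}
}
```

Keeping Discover and Map separate means the discovered API can be inspected, or narrowed down, before any objects are fetched.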

store package

Having the basic api package interfaces in place, I proceeded to design the store package which would allow storing and querying the object graph.

    // Store allows storing and querying the graph of API objects
    type Store interface {
        Graph
        // Add adds an api.Object to the store and returns a Node
        Add(api.Object, ...Option) (Node, error)
        // Link links two nodes and returns the new edge between them
        Link(Node, Node, ...Option) (Edge, error)
        // Delete deletes an entity from the store
        Delete(Entity, ...Option) error
        // Query queries the store and returns the results
        Query(...query.Option) ([]Entity, error)
    }

    // Graph is a graph of API objects
    type Graph interface {
        // Node returns the node with the given ID if it exists
        // in the graph, and nil otherwise.
        Node(id string) (Node, error)
        // Nodes returns all the nodes in the graph.
        Nodes() ([]Node, error)
        // Edge returns the edge from u to v, with IDs uid and vid,
        // if such an edge exists and nil otherwise
        Edge(uid, vid string) (Edge, error)
        // SubGraph returns a subgraph of the graph starting at Node
        // up to the given depth or it returns an error
        SubGraph(id string, depth int) (Graph, error)
    }

There is so much more to the store package than what’s shown above, but the above should give you a basic idea of what I was aiming for. I imagined, in the future, you could have different graph stores backed by different Graph databases or whatnot, so I wanted to express this in some generic API interface.

With these basic pieces in place I was ready to move to the fun part: hacking on building the Kubernetes API object graph.

kraph module

Finally the kraph module API ties both api and store packages together into a simple interface which should allow for pluggable store and api builders:

    // Kraph builds a graph of API objects
    type Kraph interface {
        // Build builds a graph and returns graph store
        Build(api.Client, ...Filter) (store.Graph, error)
        // Store returns graph store
        Store() store.Store
    }

You can find a simple implementation of a “default” kraph in the default.go file to give you an idea about how to tie all the pieces together. The code should be pretty straightforward:

- Build an API topology using the discovery and mapping client

- Build a graph from the topology and return it
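The second step, together with the variadic filters from the Build signature, can be sketched as follows. The real Filter type is not shown in this post, so the function-based Filter below is an assumption, as are the simplified Object struct and the adjacency-list Graph; this is not the code from default.go.

```go
package main

import "fmt"

// Object is a simplified, hypothetical stand-in for api.Object.
type Object struct {
	UID   string
	Kind  string
	Links []string // UIDs of the linked objects
}

// Filter reports whether an object should be kept in the graph.
// Assumption: kraph's actual Filter may be defined differently.
type Filter func(Object) bool

// Graph is a plain adjacency list keyed by object UID.
type Graph map[string][]string

// keep returns true only if the object passes every filter.
func keep(o Object, filters []Filter) bool {
	for _, f := range filters {
		if !f(o) {
			return false
		}
	}
	return true
}

// Build adds a node for every object that passes all filters and an
// edge for every link whose target also made it into the graph.
func Build(objs []Object, filters ...Filter) Graph {
	g := Graph{}
	for _, o := range objs {
		if keep(o, filters) {
			g[o.UID] = nil
		}
	}
	for _, o := range objs {
		if _, ok := g[o.UID]; !ok {
			continue
		}
		for _, to := range o.Links {
			if _, ok := g[to]; ok {
				g[o.UID] = append(g[o.UID], to)
			}
		}
	}
	return g
}

func main() {
	objs := []Object{
		{UID: "rs1", Kind: "ReplicaSet"},
		{UID: "p1", Kind: "Pod", Links: []string{"rs1", "s1"}},
		{UID: "s1", Kind: "Secret"},
	}
	noSecrets := func(o Object) bool { return o.Kind != "Secret" }
	fmt.Println(Build(objs, noSecrets))
}
```

Adding nodes first and edges second avoids dangling edges to objects that a filter has removed.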

Kubernetes API object graph

As I mentioned earlier, choosing Go for the project was primarily driven by the initial idea of building the Kubernetes API object graph and the availability of the feature-rich Go packages to interact with its remote API service. There are a lot of great Go packages in the Kubernetes project I hadn’t used before I started working on this project. Two of those became the core of the k8s package of my project: the discovery and dynamic client packages.

The discovery client helps with scanning all supported Kubernetes API groups, including Kubernetes API extensions such as the groups defined via Custom Resource Definitions (CRDs), as long as they’re enabled via particular API server flags.

The dynamic client then lets you query the discovered API resources, as returned by the Kubernetes API, for the actual API object instances of each API group [version]. This is an excellent package for building the API object topology: the perfect fit for my use-case and I didn’t even have to build it! Credit to the amazing Kubernetes community and developers who have spent a lot of time designing the project which allows for such a wealth of use-cases, including the one such as mine.

The implementation of API scanning and building the API object graph turned out to be surprisingly simple; I had actually anticipated more “pain”, but it turns out picking the right tools (Go packages) for the job can save you a tremendous amount of time and make your code so much easier to maintain by standing on the shoulders of giants.

All of this code lies in the k8s package, so feel free to have a look. The project also provides the appropriate mock versions of the API resources to make testing simple(r).

graph storage

The next step was to build some storage which would allow for querying the objects and further analysis. I wanted to test the utility of the project by building a simple in-memory engine before I’d go ahead and start storing the object in some graph database. The advantage of the in-memory store would be having the opportunity to dive into the gonum’s various graph packages – this was an excellent opportunity to learn something new again.

The most interesting parts of building the in-memory store were the ones that involved traversing the graph when searching for a particular node or edge or building a subgraph of a given radius size. Gonum provides an excellent API for both BFS (building of which using the Go standard library I had written about a while ago here) and DFS.

When it came to querying the graph nodes and edges I opted for DFS, as it’s typically more memory efficient than BFS. For building a subgraph I opted for BFS instead; this felt a bit more natural to me, and memory usage matters less there because you end up storing the subgraph nodes in memory anyway.

You can find the in-memory store implementation in the memory package, so feel free to have a look and open a PR if you spot anything incorrect or buggy.

Besides the in-memory store, I started working on a PR to add support for a dgraph store, but I have not got around to finishing it yet. Hopefully, once things at work settle a bit I’ll find some more free time to get back to hacking on it! Obviously, if you fancy a good challenge feel free to join the effort :-)
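Here is a rough sketch of the two traversals on a plain adjacency list. It deliberately uses only the Go standard library rather than the gonum traversal API the in-memory store is built on, and the Graph type, FindDFS and SubGraph are illustrative names rather than the project's own.

```go
package main

import "fmt"

// Graph is a directed adjacency list keyed by node ID.
type Graph map[string][]string

// FindDFS walks the graph depth-first from start, using an explicit
// stack, and returns the first visited node that satisfies match.
func FindDFS(g Graph, start string, match func(string) bool) (string, bool) {
	visited := map[string]bool{}
	stack := []string{start}
	for len(stack) > 0 {
		n := stack[len(stack)-1]
		stack = stack[:len(stack)-1]
		if visited[n] {
			continue
		}
		visited[n] = true
		if match(n) {
			return n, true
		}
		stack = append(stack, g[n]...)
	}
	return "", false
}

// SubGraph collects, breadth-first, every node within depth hops of
// start, together with the edges between the collected nodes.
func SubGraph(g Graph, start string, depth int) Graph {
	dist := map[string]int{start: 0}
	queue := []string{start}
	for len(queue) > 0 {
		n := queue[0]
		queue = queue[1:]
		if dist[n] >= depth {
			continue // do not expand past the requested radius
		}
		for _, m := range g[n] {
			if _, seen := dist[m]; !seen {
				dist[m] = dist[n] + 1
				queue = append(queue, m)
			}
		}
	}
	sub := Graph{}
	for n := range dist {
		sub[n] = nil
		for _, m := range g[n] {
			if _, ok := dist[m]; ok {
				sub[n] = append(sub[n], m)
			}
		}
	}
	return sub
}

func main() {
	g := Graph{
		"svc":    {"pod"},
		"pod":    {"node", "secret"},
		"node":   {},
		"secret": {},
	}
	fmt.Println(SubGraph(g, "svc", 1))
	fmt.Println(FindDFS(g, "svc", func(id string) bool { return id == "secret" }))
}
```

BFS is a natural fit for the subgraph because it visits nodes in order of distance from the start, so the depth limit falls out of the traversal itself.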

kctl: an experimental tool to build the graph

Once I had the basic implementations of the core packages in place I needed a way to test the Go module on a real-life Kubernetes cluster. I built a simple experimental tool called kctl. It’s extremely experimental and, really, it has mostly served me as a nice integration testing tool to demonstrate the module works the way it should. You can build the tool using the dedicated Makefile task:

make kctl

At the moment the tool provides a single subcommand with a few command-line options, so it’s not particularly useful, especially given the graph is only stored in memory. One thing which you might find useful is generating the earlier mentioned DOT graph by running the tool as shown below:

./kctl build k8s -format "dot" | dot -Tsvg > cluster.svg && open cluster.svg

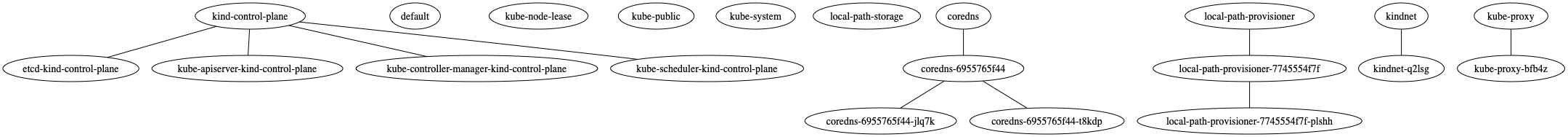

You can then open the generated SVG file in the browser and inspect the API object graph more closely. See the simple example of a small graph below:

Thanks to my friend Alex you can also filter on the kind of objects you would like to have in the graph as by default the tool maps all the available API resources and objects, which in large clusters can lead to massive SVG files that are not particularly useful.
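To give a feel for what the DOT output and the kind filter boil down to, here is a hand-rolled sketch. The project itself relies on gonum's DOT encoder, so the Node type and the DOT function below are purely illustrative assumptions and not how kctl is implemented.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Node is a hypothetical graph node labelled with its object kind.
type Node struct{ ID, Kind string }

// DOT renders the nodes of the allowed kinds, and the edges between
// them, as a Graphviz digraph. An empty kinds list keeps every node.
// IDs are quoted with %q, which is fine for plain ASCII identifiers.
func DOT(nodes []Node, edges [][2]string, kinds ...string) string {
	allowed := map[string]bool{}
	for _, k := range kinds {
		allowed[k] = true
	}

	// Sort a copy of the nodes so the output is deterministic.
	sorted := append([]Node(nil), nodes...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].ID < sorted[j].ID })

	kept := map[string]bool{}
	var b strings.Builder
	b.WriteString("digraph kraph {\n")
	for _, n := range sorted {
		if len(allowed) > 0 && !allowed[n.Kind] {
			continue
		}
		kept[n.ID] = true
		fmt.Fprintf(&b, "  %q [label=%q];\n", n.ID, n.Kind+"/"+n.ID)
	}
	for _, e := range edges {
		if kept[e[0]] && kept[e[1]] {
			fmt.Fprintf(&b, "  %q -> %q;\n", e[0], e[1])
		}
	}
	b.WriteString("}\n")
	return b.String()
}

func main() {
	nodes := []Node{{"p1", "Pod"}, {"s1", "Secret"}, {"rs1", "ReplicaSet"}}
	edges := [][2]string{{"p1", "rs1"}, {"p1", "s1"}}
	// Keep only Pods and ReplicaSets; the Secret and its edge are dropped.
	fmt.Print(DOT(nodes, edges, "Pod", "ReplicaSet"))
}
```

The resulting text can be piped into the dot command in the same way as the kctl output.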

I shall stress again, that the goal of the project was never the kctl tool but rather a generic Go module allowing for building and analyzing the graphs, but maybe with more time and better store support the tool will actually become useful for some tasks.

Future graph avenues

As I was working through the implementation I started thinking about potential future extensions. The decision to avoid tightly coupling the project to Kubernetes and to design the core API the way it is now might turn out to be the key to many future use-cases, some of which I will try to briefly summarise below.

One of the most obvious use cases could be security. It’s now clear that a lot of companies have started moving their application workloads to Kubernetes clusters (for better or worse), which are hosted by different cloud providers. This creates a bit of friction (in terms of understanding the interactions of all the moving pieces) between the cluster and the environment it is deployed into. The identities of users and services are often split and mapped between the cluster (service accounts) and the cloud identities, making it harder for security teams to see which identities have access to which API objects across both cloud and cluster. Having a way of creating a graph union between these disjoint graphs could give the users (or security engineers) much better visibility of the environment and a much faster way of identifying the potential cybersecurity blast radius.

Besides the identity and access model graphs, one can also imagine another graph union that could expand the “cyber graph” even further down to the application code. There are many vendors who already provide simple APIs for querying the container image layers and highlighting various vulnerabilities that need to be addressed. You can “graph-ify” (horrible word, sorry!) these, connect them to the cluster or wider infrastructure graph, and alert on the affected subgraphs of the ever more complex microservices architectures. Imagine a service A has a docker or source code vulnerability: you could then have an alert set up which would map the graph of its interactions to give you a better idea of what other services or parts of your infrastructure could be affected by service A getting compromised.

You can take a similar approach even further, all the way down the infrastructure levels to the raw computing resources (VMs, storage clusters, etc.). You could map them to particular Kubernetes nodes (a node is an API object in Kubernetes, too!) or EC2 instance graphs, and by creating a union between the low-level graph and the higher-level graphs you could see the path-to-vulnerability in both directions: raw-compute <-> application service <-> user identity. Also, remember that git history is a graph too (a specific kind of graph: a directed acyclic graph of commits), so you could join the git commit graph with the cyber graph and get a much better idea about the commits causing the potential vulnerabilities.

This might become even more interesting if AWS manage to revive the original AWS service operator, which could open the gate for another subgraph that can be joined with the core graph. That would lead to even more visibility into an already obscure pile of resources which we often lose track of, or which we monitor with tools that don’t quite fit the bill. The AWS operator processes custom resources (defined via CRDs) that help to manage the AWS resources. Voilà, the kraph project could help you map the AWS resources by scraping the K8s resources and storing them somewhere for querying.

Finally, another use case could be to connect the graph to your log analysis tool. Imagine each node in the graph having a unique ID. You could then analyze the logs, which would reference the particular object in the log entry via its unique ID. By extracting the data from the log and the ID of the object creating the log entry you could walk the “infra graph” and discover potential vulnerabilities or anomalies which would be connected to not only the infrastructure itself but to wider organization (remember you’d have an identity [sub]graph as part of the whole graph). You could, in fact, run this analysis as a simple Lambda function.

I could think of many other use cases but I’m going to stop here and let your imagination run loose. If you come up with something interesting, leave a comment below.

Conclusion

This blog post introduced the kraph Go module and discussed both its high-level design and potential future extensions. This is an early, a.k.a. extremely experimental, version of the project which I wanted to talk about to see what people think of it and whether the ideas I presented here are not simply “delusions of grandeur”.

To take the project to the next level we would need wide support for graph databases which would allow for rich queries and graph analysis. As I said earlier, I started working on the dgraph store implementation, but I still need to finish it. I feel like dgraph is not quite there with regards to the features I would like it to have, so I might not end up merging the PR into the project if the implementation does not tick all of my requirements.

Besides, I particularly love the idea of the serverless graph, where the scan of the APIs would be done via some lambda invocation and the graph would be stored in some serverless graph DB such as AWS Neptune which provides a wide set of features for building and querying the graphs. This would free people from running the servers and in fact, given the project is written in Go it should allow for easier integration with Go function invocation in the cloud.

That’s it from me today, feel free to open a PR with your suggestions or leave a comment below if you find this blog post/project interesting. I shall do my best to reply to it!